NVIDIA finds itself at the center of a hugely valuable market with a growing need for AI and thinking machines. If you've seen the 'Terminator' movies and remember Cyberdyne Systems, the company that created Skynet, you'll recall that it was building a new chip that enabled computers to think. NVIDIA (@nvidia) is the closest thing to the fictional Cyberdyne today.

In the past, the only people who knew anything about NVIDIA were a small group of gamers and developers who depended on NVIDIA graphics cards and chips. Today, NVIDIA's stock price is reaching new highs, as car companies, medical imaging firms and robotics companies can't get enough of the chips and services this company provides. I recently spent some time with the company at its annual meeting.

It seems NVIDIA was in the right place at the right time: an AI revolution was under way, a market in need of AI tools was ripe, and NVIDIA was there to take advantage of it. It only took the company 25 years to become an overnight success, and it has left IBM Cognitive Business and AMD in the dust. As with all companies, a mix of luck, timing and hard work has brought NVIDIA to where it is today. It is now poised to become one of the most important suppliers of picks and shovels to the growing artificial intelligence, machine learning and robotics industries worldwide. Computing is changing rapidly, and companies that don't think about how AI will change their business, in some form or another, risk being left behind.

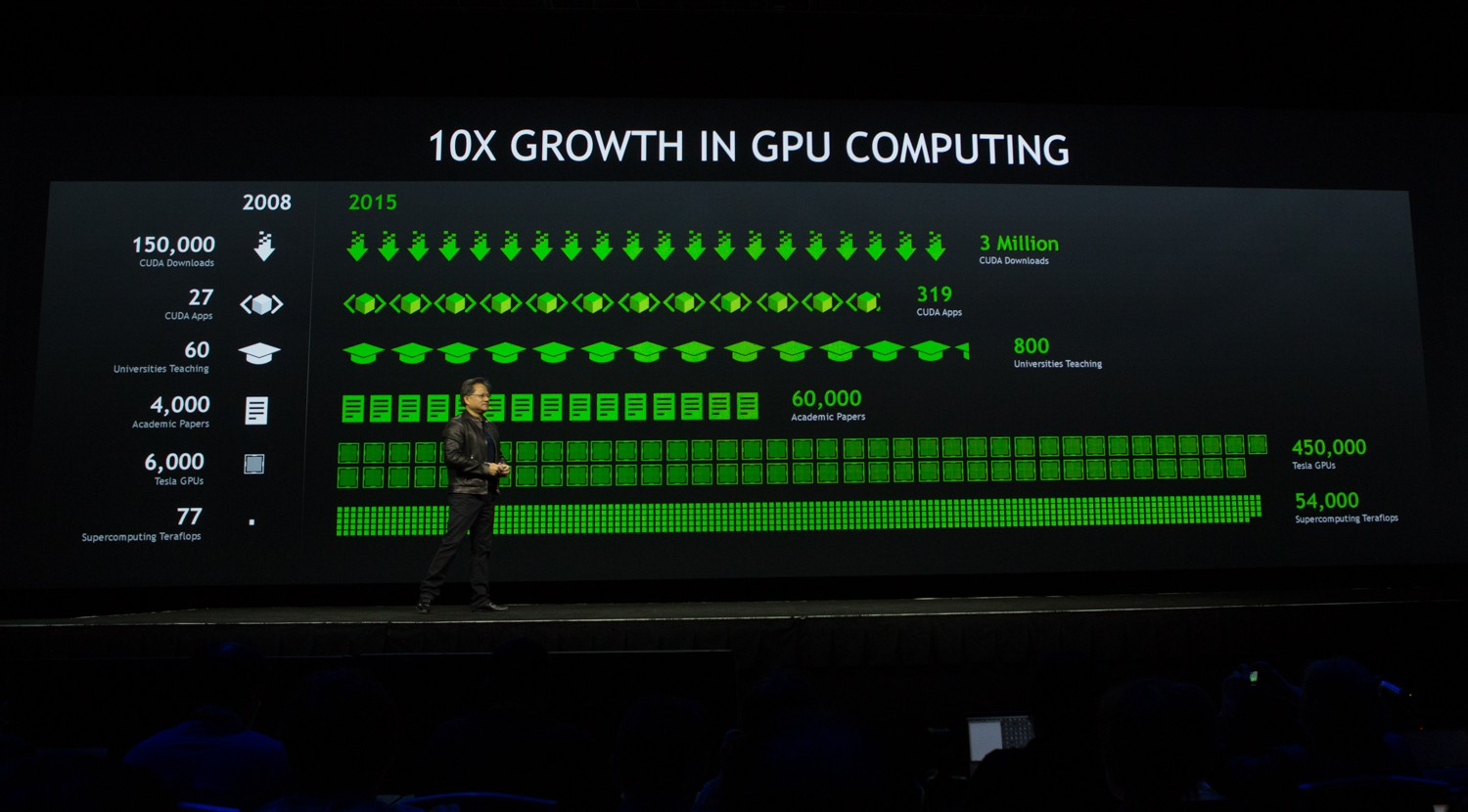

Before looking at the industries that AI and ML (machine learning) will change, we need to understand how NVIDIA supports this new industry. The chips that NVIDIA primarily produces are GPUs (graphics processing units). A GPU was originally designed for 3D applications: it is a single-chip processor that creates lighting effects and transforms objects every time a 3D scene is redrawn. These are mathematically intensive tasks that would otherwise put quite a strain on the CPU. GPU-accelerated computing is the use of a GPU together with a CPU to accelerate applications such as deep learning and AI. It offloads the compute-intensive portions of an application to the GPU, while the remainder of the code still runs on the CPU. From a user's perspective, applications simply run much faster.
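To make the "transforms objects" point a little more concrete, here is a minimal sketch of the kind of arithmetic involved, assuming NumPy on an ordinary CPU: one 4x4 matrix applied to every vertex of a 3D scene. Each vertex is computed independently of all the others, which is exactly the sort of work a GPU spreads across thousands of cores. The helper names (`rotation_z`, `translation`, `transform_vertices`) are purely illustrative and do not come from any NVIDIA library.

```python
import numpy as np


def rotation_z(theta: float) -> np.ndarray:
    """Return a 4x4 homogeneous matrix rotating points by theta radians about the z axis."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([
        [c, -s, 0.0, 0.0],
        [s, c, 0.0, 0.0],
        [0.0, 0.0, 1.0, 0.0],
        [0.0, 0.0, 0.0, 1.0],
    ])


def translation(dx: float, dy: float, dz: float) -> np.ndarray:
    """Return a 4x4 homogeneous matrix shifting points by (dx, dy, dz)."""
    m = np.eye(4)
    m[:3, 3] = [dx, dy, dz]
    return m


def transform_vertices(vertices: np.ndarray, matrix: np.ndarray) -> np.ndarray:
    """Apply one 4x4 transform to every vertex in an (N, 3) array.

    No row depends on any other row: this is the data-parallel
    pattern that GPUs are built to run at massive scale.
    """
    n = vertices.shape[0]
    # Append w = 1 to each vertex so translation can be a matrix product.
    homogeneous = np.hstack([vertices, np.ones((n, 1))])
    # Row-vector form: (M @ v)^T == v^T @ M^T, computed for all N rows at once.
    transformed = homogeneous @ matrix.T
    return transformed[:, :3]


if __name__ == "__main__":
    # Rotate two points a quarter turn about z, then move them 5 units along z.
    points = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
    model = translation(0.0, 0.0, 5.0) @ rotation_z(np.pi / 2)
    # Expected (up to floating-point rounding): [[0, 1, 5], [-1, 0, 5]]
    print(transform_vertices(points, model))
```

This sketch runs on the CPU only. Libraries such as CuPy offer a largely NumPy-compatible array API that executes on NVIDIA GPUs, which is one common way code of this shape gets offloaded in practice.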

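As it happens, this kind of chip is extremely important for the latest AI and ML applications, because training and running neural networks comes down to the same kind of massively parallel matrix arithmetic.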

Let’s look at a few industries that rely on GPU computing today.

Self-Driving Cars

Self-driving cars need to 'see' the world around them and make judgements in real time. To do this, they need to make extremely fast calculations to judge speeds and distances and to predict accurately how other road users will behave. On top of this, the GPU has to combine millions of data points collected from various sensors into an image and then use that information to understand the path ahead. This is a hugely difficult, computationally intensive task that would have been impossible in a car a few years ago, unless that car was towing racks of traditional servers. Nowadays, energy-efficient chips can run extremely fast calculations on a card the size of a few cans of Coke.

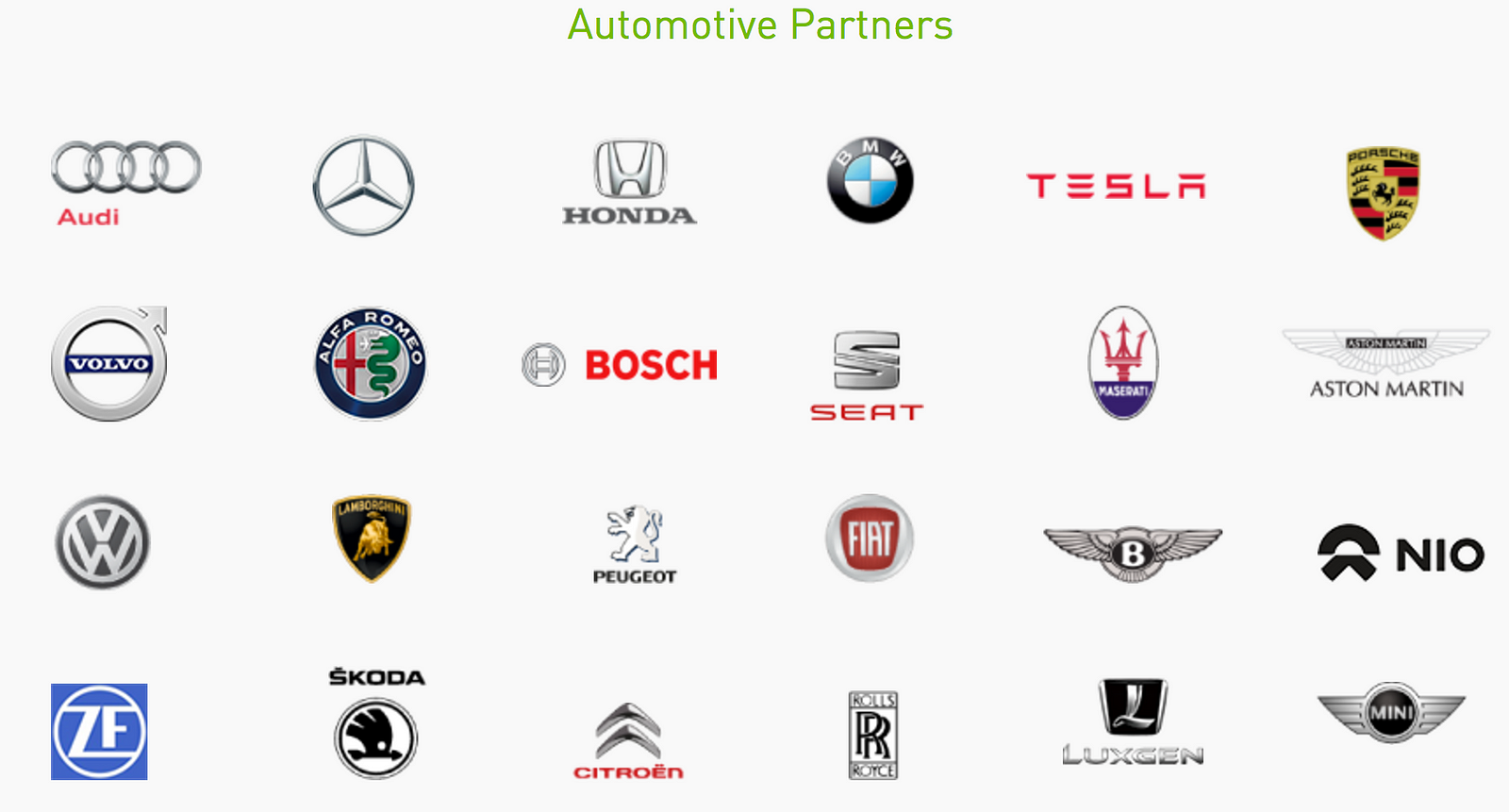

NVIDIA (@nvidiadrive) has done deals with nearly every major car manufacturer to supply chips, algorithms, and many other related products and services.

Car companies are beginning to realize that unless they make their cars smarter, from the simplest capabilities (like automatic cruise control) to the most complex (automatic driving), they are going to lose out to their competitors. The company they are turning to is NVIDIA; IBM and AMD are being crushed in this market. If you've ever driven a Tesla and tried Autopilot, you'll understand how important it is that computers can "see and think for themselves".

Robotics

Training robots is hard. Not all robots can cope with everyday tasks like opening doors. However, most robots today perform very routine, repetitive tasks such as welding, inspecting, measuring and other industrial jobs. As robots become more commonplace, they will have to perform more complicated procedures and work alongside humans. Again, NVIDIA chips will become more and more useful, as these robots will need to sense more of the world around them and become smarter.

As with humans, the only way to acquire skills is through practice. From an early age we learn skills such as kicking a ball or opening a bottle, but not without plenty of trial and error. It's exactly the same for robots. However, robots are extremely expensive and complicated machines that, unlike us, don't simply bruise and recover, and for the most part they can't pick themselves up. On top of this, they can't be trained around humans, as they could hurt or kill someone nearby. NVIDIA has recognized the need to teach robots and has built a robot simulator called Isaac: a simulated environment that uses algorithms and computer models to train a robot to perform a task over time. For a great explanation, listen to Jensen Huang talking about it at the company's most recent conference.

Image & Voice Recognition

More and more devices entering our homes and offices use voice and image recognition and take action based on it. Apple was the first to bring voice recognition into the mainstream with Siri, and now Amazon is investing heavily in AI and voice recognition with the Echo series. To understand how seriously Amazon is taking this market, look at its jobs page: at the time of writing, it listed 987 open jobs for roles such as speech scientists and specialists in machine learning and artificial intelligence.

Here again, voice recognition depends on GPU processing, because most voice queries are made in non-optimal conditions (background noise, accents and inferred language). Smart companies like Amazon understand that voice will become one of the standard ways of inputting computer commands in the future, instead of relying on clunky fingers.

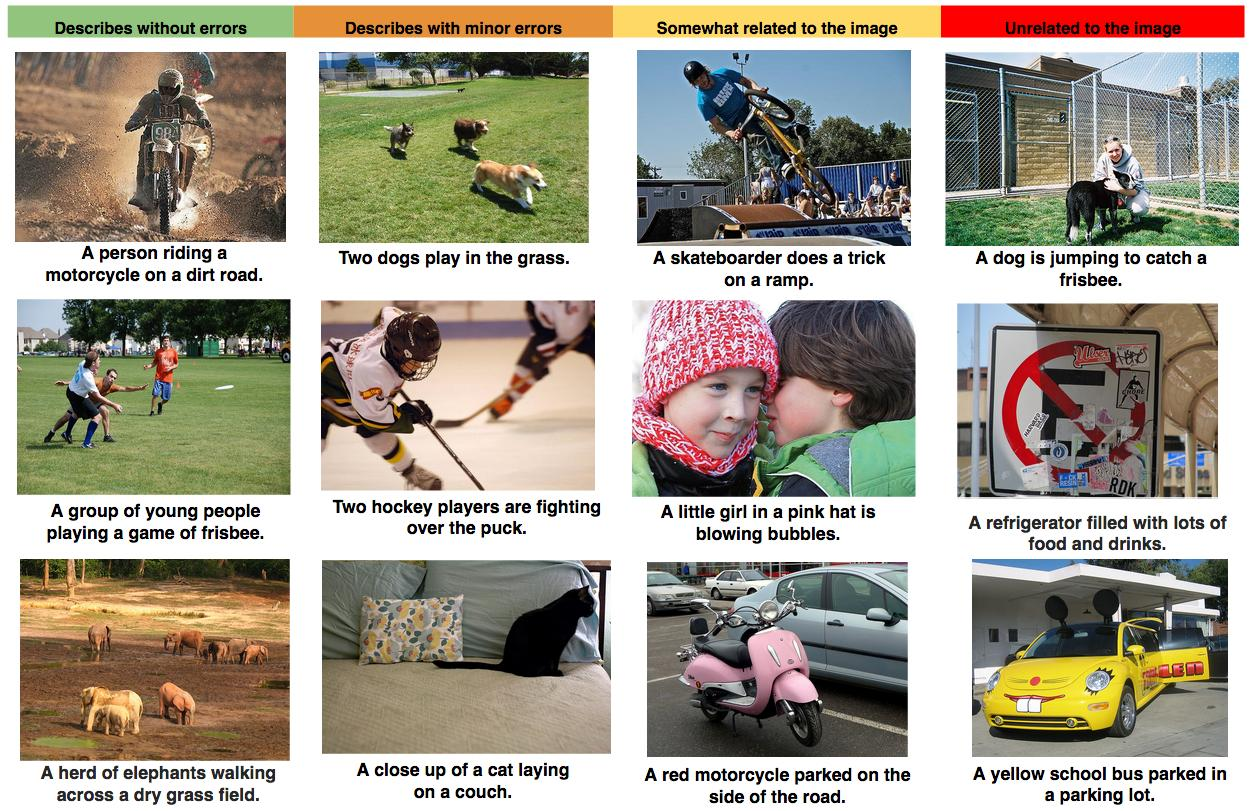

Image Recognition

Image recognition is another area that depends on GPU computing, and it is already making headway in many fields. If you haven't used Google Photos, you should try it out. Be warned, however: the reason Google wants you to upload a lifetime of photos for free is that it can learn so much about you from each one. Google may not use this for advertising purposes today, but it may do so in the future. Google uses image recognition to classify, identify and sort thousands of images, which makes finding anything very simple. A search for “wedding” or “cat” brings up everything in those categories, along with metadata such as GPS locations, times, dates and the other people in the photos, if you've previously tagged them. (There is a reason that face tagging is disabled in Europe.)

Another company, Blippar.com (@blippar), uses neural nets, machine learning and visual recognition in its app. When you point your phone camera at something, the app immediately recognizes the object and gives you more information about it. Today, car companies use it to give you a 360-degree view of a new model's interior simply by pointing the app at a car advertisement in a magazine. However, the app can also recognize the faces of certain famous people. As you can imagine, this kind of technology could be very useful for scanning large crowds and searching for wanted people. It could also be adapted for glasses, so that the wearer could recognize everyone on the street from their public LinkedIn or Facebook photos.

Another interesting use of image recognition technology comes from an Israeli company, UVeye.com. It uses optical recognition to scan the underside of vehicles for damage or for objects that should not be there, all in milliseconds, as a car passes over a camera embedded in the road.

Obviously, this raises many questions around privacy, security and government intrusion, but that's for another blog post. There is a tradeoff between getting very useful services and giving more companies access to our private lives so that they can deliver those services.

One thing is for sure: NVIDIA is going places, and it is providing the chips that will power the future. This company is just getting started.